State-of-the-art performance in disease discovery

DTrace addresses the limitations of existing encoder–decoder frameworks by reliably capturing critical diagnostic details that are often overlooked.

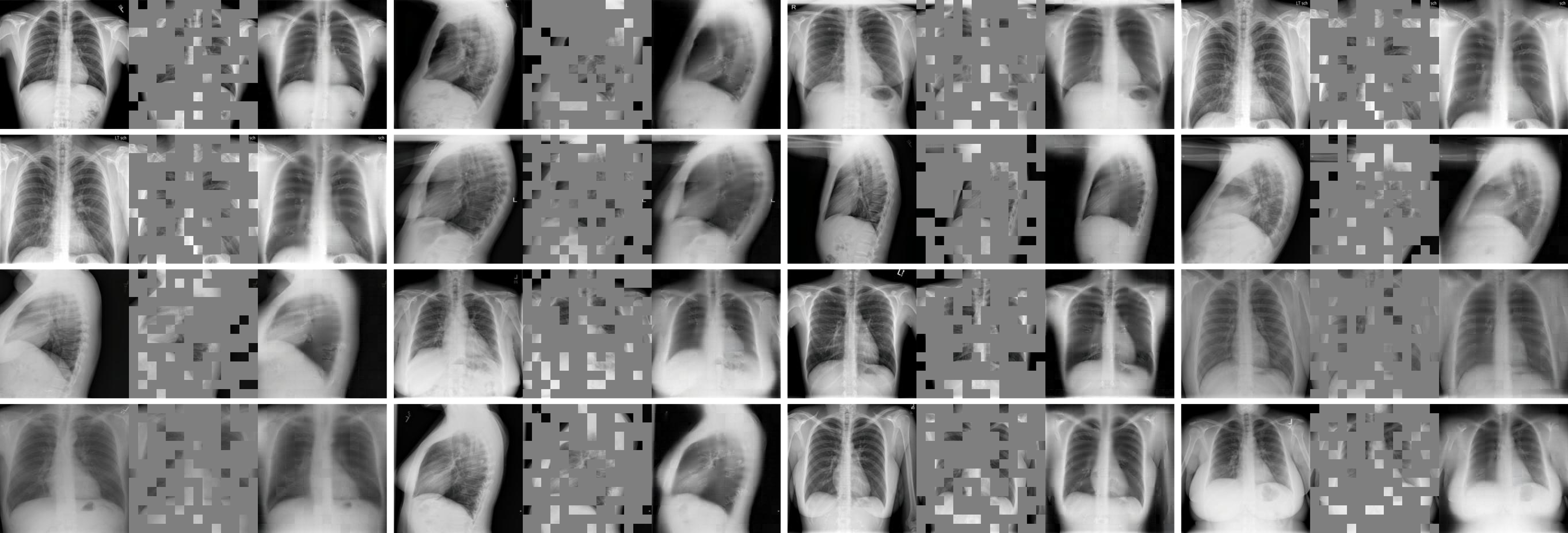

DTrace also performs well at image reconstruction. Even when 75% of an image's pixels are masked, DTrace produces coherent, semantically faithful reconstructions that preserve both morphological and clinical consistency.
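To make the 75% masking setting concrete, here is a minimal sketch of how such an input could be prepared. It is an illustration under stated assumptions, not DTrace's actual pipeline: the function name `mask_pixels`, the uniform per-pixel random masking, and the choice to fill hidden pixels with zeros are all hypothetical, and the real model may mask in patches or use a different fill value.

```python
import numpy as np


def mask_pixels(image, mask_ratio=0.75, seed=0):
    """Hide a random subset of pixel locations (hypothetical helper).

    Returns the masked image and a boolean mask where True marks a hidden pixel.
    Assumption: masking is uniform over individual pixels, filled with zeros.
    """
    rng = np.random.default_rng(seed)
    h, w = image.shape[:2]
    n_total = h * w
    n_masked = int(round(mask_ratio * n_total))

    # Pick exactly n_masked distinct pixel positions to hide.
    hidden = rng.choice(n_total, size=n_masked, replace=False)
    mask = np.zeros(n_total, dtype=bool)
    mask[hidden] = True
    mask = mask.reshape(h, w)

    masked = image.copy()
    masked[mask] = 0  # works for (H, W) and (H, W, C) arrays
    return masked, mask


# Stand-in for a grayscale medical image; real data would be loaded from disk.
image = np.random.default_rng(1).random((64, 64))
masked, mask = mask_pixels(image, mask_ratio=0.75)
print(f"hidden fraction: {mask.mean():.2f}")
```

A reconstruction model would receive `masked` as input and be scored on how well it recovers the pixels where `mask` is True.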